Closed captioners don’t caption sounds.

Captioners make meaning. They caption programs. They don’t caption sounds.

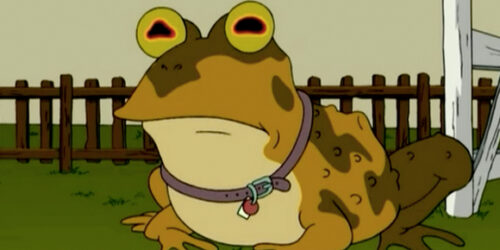

What’s the best way to caption this sound? Unless you’ve heard this sound before, or even better, have some visual clues about what contraption or entity is making the sound, or some information about the purpose of the sound in a specific context, you will probably be unable to answer this question effectively. Even having additional information about the origin of the sound may not be enough. Does it help to know that the sound is in fact a “turbine engine played backwards”? Maybe it does, but only if the visual accompaniment to the sound included an actual turbine engine, and not, as you may already know, the Hypnotoad character from the animated TV show Futurama. (Clips available on Comedy Central’s official Futurama site are not closed captioned; the video player on the site is not configured with a CC button for closed captioning functionality.)

Here’s an example of the captioned Hypnotoad in action from Season 4, Episode 6: “Bender Should Not Be Allowed on Television.” (Source: Netflix version with closed captions enabled.)

Note how the caption for the Hypnotoad sound (“Eyeballs Thrumming Loudly”) is tied directly to the visual context for the sound. The sound apparently emanates from the Hypnotoad’s eyes, so that’s how the sound is captioned. (The sound is not always captioned this way when the recurring Hypnotoad character appears in other episodes. In a future post, I’ll look at the different ways in which Hypnotoad is captioned.)

Caption the show, not the sound.

Closed captioners don’t caption sounds. They caption shows. They convey contexts. They tether sounds to visuals. They make significant sounds visible. While it would be technically correct to caption the Hypnotoad sound as a “turbine engine played in reverse,” it wouldn’t be contextually correct. Captions do not simply convey the origin of a sound — or even what the sound really is in any abstract, technical, or decontextualized sense — but what the sound is doing in a scene. The apparent origin needs to be captioned (i.e. the Hypnotoad apparently produces the sound), not necessarily the actual origin (i.e. a turbine engine actually produces the sound). What this implies is that the same sound could be captioned in very different ways depending on the visual context. For example, a recording studio, an engine testing facility, and Futurama are three very different visual contexts, and yet all three could plausibly include the same engine sound, with a potentially different caption for that sound in each visual context.

Asking someone to caption a sound file in the absence of any visual cues is unfair, but I wanted to make a point by doing it. And that point is: It’s impossible (or at least perilous) to caption some sounds in isolation, especially non-speech sounds, because captioners don’t caption sounds per se. They make meaning in specific audiovisual contexts. Maybe this goes without saying, but I sometimes wonder whether our definitions of closed captioning are too reductive and simplistic. Wikipedia’s definition, for example, is: “Closed captions typically show a transcription of the audio portion of a program as it occurs (either verbatim or in edited form), sometimes including non-speech elements.” Such a definition smacks of too much objectivity, in my opinion. Captioning is an art. It’s interpretation, not science. It’s not transcription. The notion of a “transcript” aligns captioning with the process of taking an audio file (e.g. a podcast or a recorded audio interview) and converting it to words. That’s not what captioning is, because the captioning of sound cannot always be done in the absence of what’s happening visually on the screen. Captions are not transcriptions of sounds but an attempt to express how sounds make meaning in, and support, specific visual contexts.

A transcript implies a neutral recording (in words) of the entire collection of sounds in a program (the so-called “audio portion” in the definition above). But captioners don’t caption every sound, not only because there isn’t enough room in the caption track to do so while adhering to reading speed guidelines, but also because some sounds are not significant and could even distract or confuse viewers if captioned. That’s the problem with keynote sounds that are mistakenly captioned verbatim, as in this example from Knight & Day in which speech sounds that are inaudible and part of the background hum (or “key”) of the scene are captioned anyway.

Captioners don’t caption all sounds or create a transcript of the audio. They don’t even caption sounds per se, if by that we mean sounds in isolation, cut off from the purposive work sounds do in specific visual contexts.

(I had planned to devote this post to the different ways in which Hypnotoad is captioned on Futurama and then reflect on whether consistency is a problem with recurring sounds/contexts on the same show — along the lines of what I’ve done previously with “running gags” and what I call “series awareness.” But I think I’m going to save that little project for a future post.)